Accountability Is a Feature

Designing Agentic Systems with Good Habits: Auditable Decisions

The “Why” Question

There is a specific question that kills agentic projects. It usually comes from a VP, a regulator, or an angry customer.

“Why did the agent do that?”

In a traditional system, you pull the logs. You see the if/else block. You see the variable state. You point to line 42 and say, “Because this condition was met.”

In an agentic system, the answer is often terrifyingly vague.

“The model felt like it.”

“The probability distribution leaned that way.”

“I don’t know.”

If you cannot explain why a decision was made, you do not own the system. You are just watching it.

Accountability is often treated as the boring part of AI: a compliance box to check. This is backwards. Accountability is a feature. It is the only thing that separates a tool you can trust from a toy you can only hope works.

This article is part of a series where I walk through how I think about designing agentic systems using a set of practical habits.

Rather than talking about agents in the abstract, I’m using a concrete example throughout the series: a customer support chatbot for a power utility company. This is not a real system and not something I’ve built. It’s a thought exercise designed to surface real design tradeoffs that show up in production systems.

The chatbot helps customers start and stop service, ask billing questions, report outages, request maintenance, challenge bills, apply for rebates, and request refunds. Behind the scenes, it interfaces with identity systems, billing platforms, internal policy documents, regulatory constraints, and operational workflows.

In other words, it looks simple from the outside, and complicated everywhere else.

Across this series, I evolve this system one habit at a time. Each article focuses on a single habit and shows how it changes the way the system is designed, where responsibility lives, and how risk is managed.

This article explores the final habit: Visible Accountability.

In the Agent Habits framework, this habit ensures that agent actions can be understood, traced, and owned after the fact.

Logs are not accountability

There is a misconception that if you save the chat logs, you have accountability.

You don’t. You have a transcript.

A transcript tells you what was said. It does not tell you why it was said.

Why did the agent choose the “Refund” tool instead of the “Credit” tool?

Which policy document did it reference?

Did it see the user’s VIP status, or did it miss it?

Accountability means designing the system so that every decision leaves a breadcrumb trail of intent, not just output.

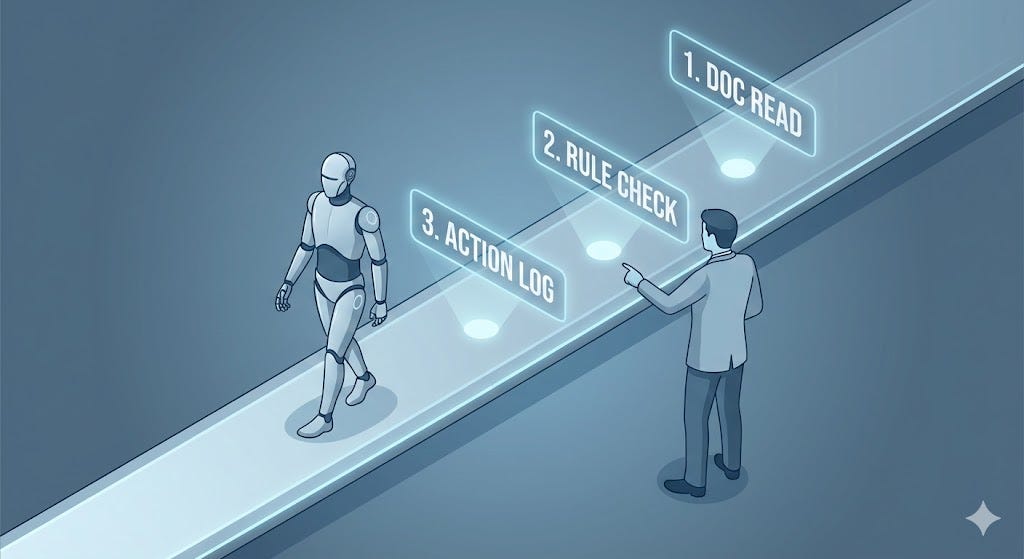

How to make decisions visible

Accountability isn’t magic; it’s architecture. You don’t need to “solve interpretability” to solve accountability. You just need to structure your agent’s output to include the “Why” alongside the “What.”

Here are three ways to apply this:

1. Force Decision Metadata

Don’t just let the agent perform an action. Require it to generate structured metadata explaining the action.

Bad: Agent calls deny_refund(user_id).

Good: Agent calls deny_refund(user_id, reason_code="POLICY_VIOLATION", evidence="claim_date_exceeds_30_days").
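One way to enforce this is to make the metadata part of the tool's signature, so the call cannot happen without it. The sketch below assumes a Python tool layer. The ReasonCode values other than POLICY_VIOLATION, the RefundDecision record, and the "user-123" ID are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ReasonCode(Enum):
    # Hypothetical closed set; only POLICY_VIOLATION appears in the article.
    POLICY_VIOLATION = "POLICY_VIOLATION"
    DUPLICATE_CLAIM = "DUPLICATE_CLAIM"
    MISSING_DOCUMENTATION = "MISSING_DOCUMENTATION"


@dataclass(frozen=True)
class RefundDecision:
    user_id: str
    action: str
    reason_code: ReasonCode
    evidence: str


def deny_refund(user_id: str, *, reason_code: str, evidence: str) -> RefundDecision:
    """Deny a refund only when the caller says why, in a checkable form."""
    # Keyword-only, required parameters: deny_refund(user_id) alone is a TypeError.
    code = ReasonCode(reason_code)  # raises ValueError for codes outside the set
    if not evidence.strip():
        raise ValueError("deny_refund requires non-empty evidence")
    # A real system would persist this record alongside the action it describes.
    return RefundDecision(user_id, "deny_refund", code, evidence)


decision = deny_refund("user-123", reason_code="POLICY_VIOLATION",
                       evidence="claim_date_exceeds_30_days")
print(decision.reason_code.value, decision.evidence)
```

Using a closed set of reason codes rather than free text is a deliberate choice here: codes can be counted and filtered later, while the evidence field carries the specifics. If your tool-calling layer builds the tool schema from the function signature, required fields like these also push the model to supply them.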

2. Require Policy Citations

If an agent enforces a rule, it should cite the source. This is critical for debugging.

Bad: “I cannot process this request.”

Good: “I cannot process this request because it violates [Rule 4.2: Maximum Daily Allowance] defined in [Policy_Doc_v2].”
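A citation only helps if it points at something real. The following is a minimal sketch, assuming refusals use the bracketed format shown above and that enforceable rules live in a lookup table. POLICY_RULES, Citation, and validate_refusal are hypothetical names. Parsing free text with a regular expression is brittle, so a production system might instead have the agent emit the citation as structured fields.

```python
import re
from dataclasses import dataclass

# Hypothetical registry of enforceable rules: (document, rule id) -> rule title.
POLICY_RULES = {
    ("Policy_Doc_v2", "4.2"): "Maximum Daily Allowance",
}

# Matches the citation format used above: [Rule <id>: <title>] defined in [<doc>]
CITATION_PATTERN = re.compile(
    r"\[Rule (?P<rule>[\d.]+): (?P<title>[^\]]+)\] defined in \[(?P<doc>[^\]]+)\]"
)


@dataclass(frozen=True)
class Citation:
    doc: str
    rule: str
    title: str


def validate_refusal(message: str) -> Citation:
    """Reject a refusal that does not cite a rule we actually have on file."""
    match = CITATION_PATTERN.search(message)
    if match is None:
        raise ValueError("refusal does not cite a policy rule")
    citation = Citation(match["doc"], match["rule"], match["title"])
    known_title = POLICY_RULES.get((citation.doc, citation.rule))
    if known_title is None:
        raise ValueError(f"unknown rule {citation.rule} in {citation.doc}")
    if known_title != citation.title:
        raise ValueError(f"rule {citation.rule} is titled {known_title!r}, not {citation.title!r}")
    return citation


msg = ("I cannot process this request because it violates "
       "[Rule 4.2: Maximum Daily Allowance] defined in [Policy_Doc_v2].")
print(validate_refusal(msg))
```

Checking the title as well as the rule number is meant to catch a model that cites a real rule ID but paraphrases or invents what the rule says.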

3. Link Automation to Owners

Every tool the agent uses should have a human owner. If the agent makes a mistake using the disconnect_service tool, the question isn’t just “Why did the model do that?” but “Who defined the safety checks for that tool?”

Implementation: Tag every tool definition with an owner_team (e.g., “Billing Ops”). When the agent fails, route the ticket to that team, not the AI team.
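In code, the ownership tag can be a required field on the tool definition, with failure tickets routed by looking it up. This is a sketch under assumptions: ToolSpec, register, route_failure, and the "Field Operations" team are hypothetical. Only disconnect_service and “Billing Ops” come from the text above.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str
    owner_team: str  # human team accountable for this tool's safety checks

    def __post_init__(self) -> None:
        # Refuse to register a tool that nobody owns.
        if not self.owner_team.strip():
            raise ValueError(f"tool {self.name!r} has no owner_team")


TOOL_REGISTRY: Dict[str, ToolSpec] = {}


def register(spec: ToolSpec) -> None:
    TOOL_REGISTRY[spec.name] = spec


def route_failure(tool_name: str, summary: str) -> Dict[str, str]:
    """Build a ticket for the team that owns the tool, not the AI team."""
    owner = TOOL_REGISTRY[tool_name].owner_team  # KeyError if the tool was never registered
    return {"assign_to": owner, "tool": tool_name, "summary": summary}


# Hypothetical registrations; only "Billing Ops" comes from the article.
register(ToolSpec("disconnect_service", "Schedule a service disconnection.", "Field Operations"))
register(ToolSpec("deny_refund", "Deny a refund with a reason code.", "Billing Ops"))

ticket = route_failure("disconnect_service", "Disconnected an account with an open payment plan")
print(ticket["assign_to"])  # Field Operations
```

Making owner_team mandatory at registration time means an unowned tool fails before it ever reaches the agent, rather than surfacing as an orphaned incident later.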

Applying this to the utility chatbot

Imagine a customer complains that their refund was denied unfairly. They claim the chatbot was rude and ignored their warranty.

The Unaccountable System:

You read the chat. The bot said, “I’m sorry, I can’t do that.”

You look at the prompt. It says, “Be helpful.”

You look at the tool logs. No tools were called.

You have no idea why it refused. Was it a hallucination? A safety filter? A bad retrieval? You can’t fix it because you can’t see it.

The Accountable System:

You look at the Decision Trace:

Intent Classification: Refund_Request (Confidence: 0.98)

Context Retrieval: Pulled Policy_Refunds_2024.pdf

Constraint Check: User_Tenure < 30 days

Policy Rule Applied: “Refunds not available for new accounts.”

Action: Defer_To_Human (Triggered by negative sentiment)
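A trace like this can be a small append-only record that the orchestration code writes at each stage as the stage runs, rather than an explanation the model produces after the fact. The sketch below assumes that design. DecisionTrace, TraceStep, and the "conv-001" ID are hypothetical; the step contents mirror the trace above.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class TraceStep:
    stage: str   # e.g. "Intent Classification", "Action"
    detail: str  # what the system saw or decided at this stage


@dataclass
class DecisionTrace:
    conversation_id: str
    steps: List[TraceStep] = field(default_factory=list)

    def record(self, stage: str, detail: str) -> "DecisionTrace":
        """Append a step as it happens; returns self so calls can be chained."""
        self.steps.append(TraceStep(stage, detail))
        return self

    def render(self) -> str:
        """Human-readable view for whoever is answering the complaint."""
        return "\n".join(f"{s.stage}: {s.detail}" for s in self.steps)

    def to_json(self) -> str:
        """Serialized form for storage next to the chat transcript."""
        return json.dumps(asdict(self))


trace = (
    DecisionTrace("conv-001")
    .record("Intent Classification", "Refund_Request (Confidence: 0.98)")
    .record("Context Retrieval", "Pulled Policy_Refunds_2024.pdf")
    .record("Constraint Check", "User_Tenure < 30 days")
    .record("Policy Rule Applied", "Refunds not available for new accounts.")
    .record("Action", "Defer_To_Human (Triggered by negative sentiment)")
)
print(trace.render())
```

Keeping the trace separate from the transcript is the point: the transcript records what was said, and the trace records what the system did and which inputs drove it.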

Now you know exactly what happened. The agent didn’t “fail.” It correctly applied a policy you forgot existed.

If the answer is “the agent decided,” the system has failed. The agent should surface the logic of the system, not obscure it.

Accountability enables learning

The real value of accountability isn’t about blaming the bot. It’s about fixing the business.

When you make decisions visible, failures become data.

If you see the agent consistently misinterpreting the “Solar Rebate” policy, you don’t rewrite the prompt. You rewrite the policy to be clearer.

If you see the agent escalating 40% of “Billing” queries, you know you have a gap in your toolset.

Without accountability, you are just guessing. With it, you are engineering.
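Once decisions are stored as structured records, an observation like "40% of Billing queries escalate" becomes a simple aggregation. This sketch assumes each stored decision has hypothetical intent and action fields, and that Defer_To_Human marks an escalation. The sample records are illustrative inputs, not measured data.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def escalation_rates(decisions: Iterable[Mapping[str, str]]) -> Dict[str, float]:
    """Fraction of decisions per intent whose action was a handoff to a human."""
    totals: Counter = Counter()
    escalated: Counter = Counter()
    for d in decisions:
        totals[d["intent"]] += 1
        if d["action"] == "Defer_To_Human":
            escalated[d["intent"]] += 1
    return {intent: escalated[intent] / n for intent, n in totals.items()}


# Illustrative records only; in practice these would come from stored decision traces.
sample = [
    {"intent": "Billing", "action": "Defer_To_Human"},
    {"intent": "Billing", "action": "Answer_Question"},
    {"intent": "Billing", "action": "Defer_To_Human"},
    {"intent": "Billing", "action": "Answer_Question"},
    {"intent": "Billing", "action": "Answer_Question"},
    {"intent": "Outage_Report", "action": "Create_Ticket"},
]
print(escalation_rates(sample))  # {'Billing': 0.4, 'Outage_Report': 0.0}
```

Grouping by intent is what turns a pile of escalations into a pointer at a specific gap, such as a missing billing tool.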

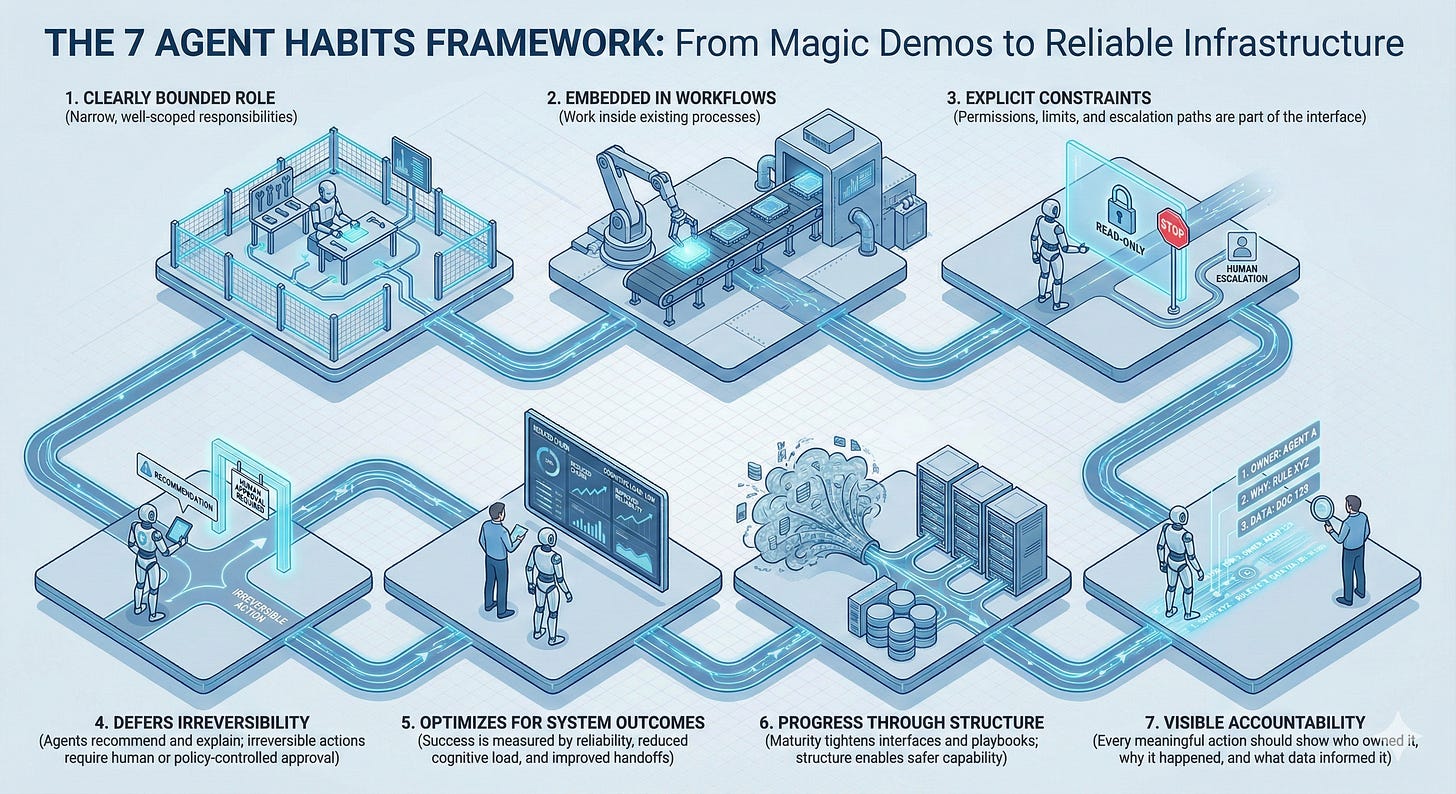

Closing the Loop: The 7 Habits

We have walked through seven habits that transform agentic systems from “magic demos” into reliable infrastructure.

Clearly Bounded Roles: Know what the agent is for.

Embedded in Workflows: Don’t let the agent own the process; let it be a step within it.

Explicit Constraints: Limits are about physics, not suggestions.

Defers Irreversibility: Escalation is a feature, not a failure.

Optimizes for System Outcomes: Measure the health of the business, not the speed of the bot.

Progress Through Structure: Mature systems get narrower and more predictable over time.

Visible Accountability: If you can’t explain why, you can’t fix what.

Building agents is easy. Building agentic systems that survive contact with reality is hard. These habits are the difference.

The broader habits framework lives here:

https://agent-habits.github.io/